王树森Transformer学习笔记

Transformer

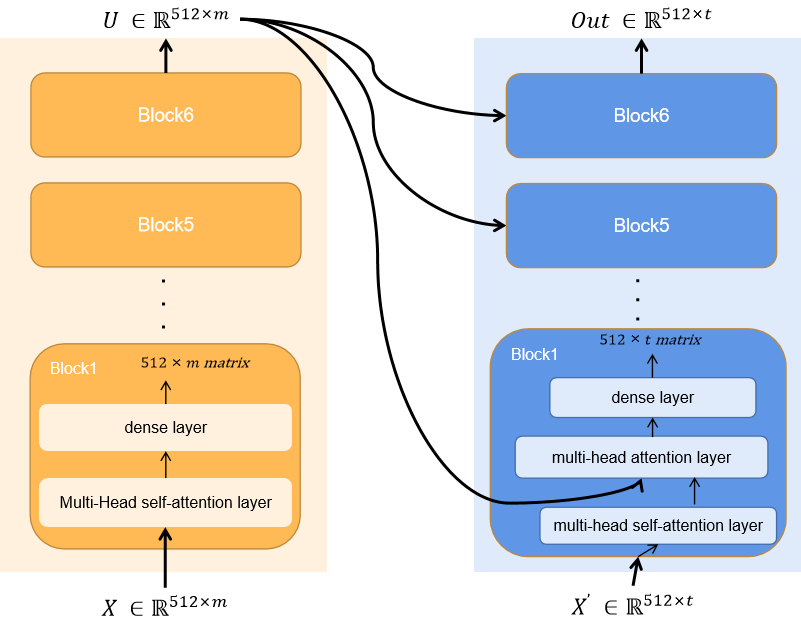

Transformer是完全由Attention和Self-Attention结构搭建的深度神经网络结构。

其中最为重要的就是Attention和Self-Attention结构。

Attention结构

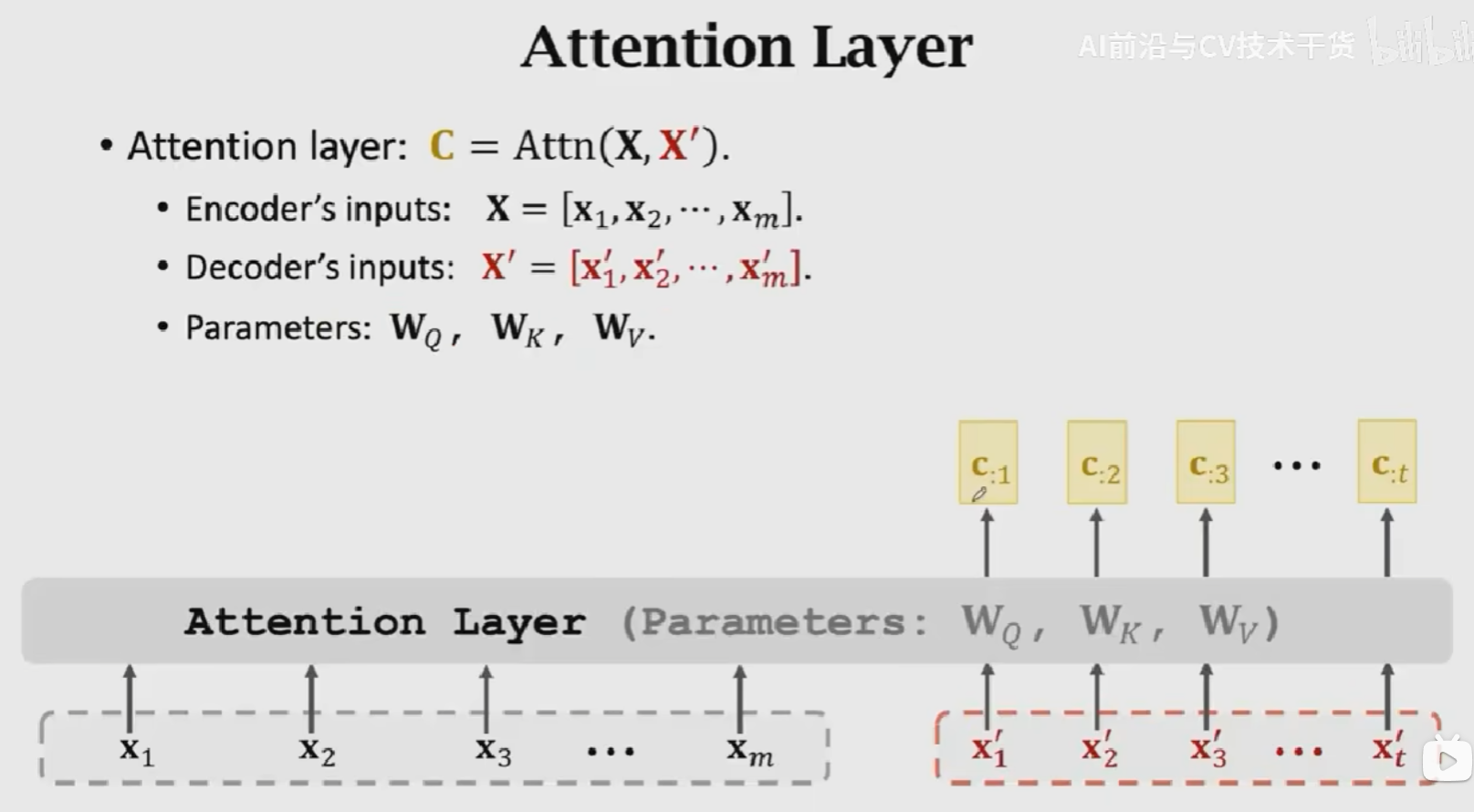

Attention Layer接收两个输入

\(X = [x_1, x_2, x_3, ..., x_m]\)

,Decoder的输入为

\(X' = [x_1^{'}, x_2^{'}, x_3^{'}, ...,x_t^{'}]\)

,得到一个输出

\(C = [c_1, c_2, c_3, ..., c_t]\)

,包含三个参数:

\(W_Q, W_K, W_V\)

。

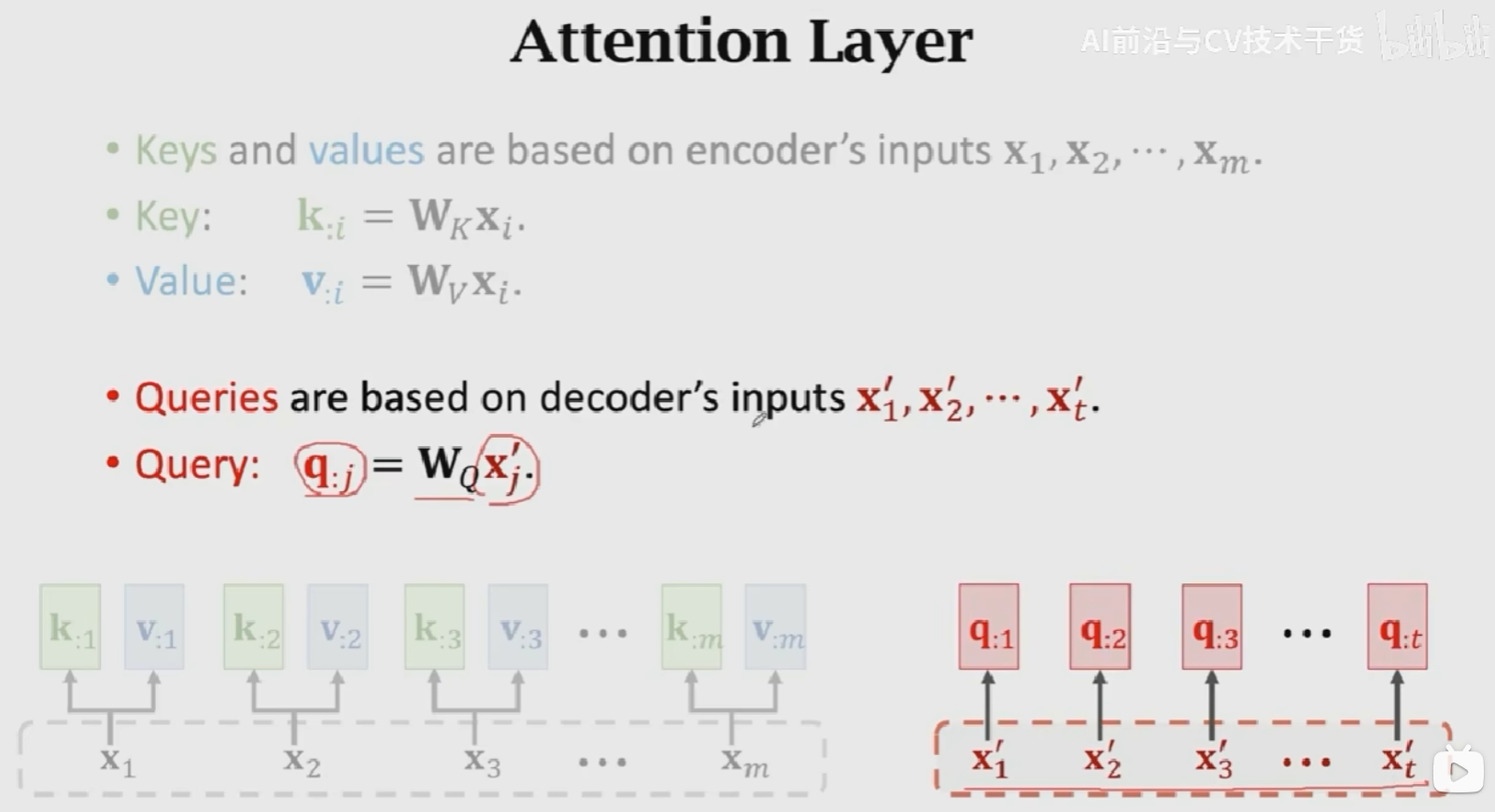

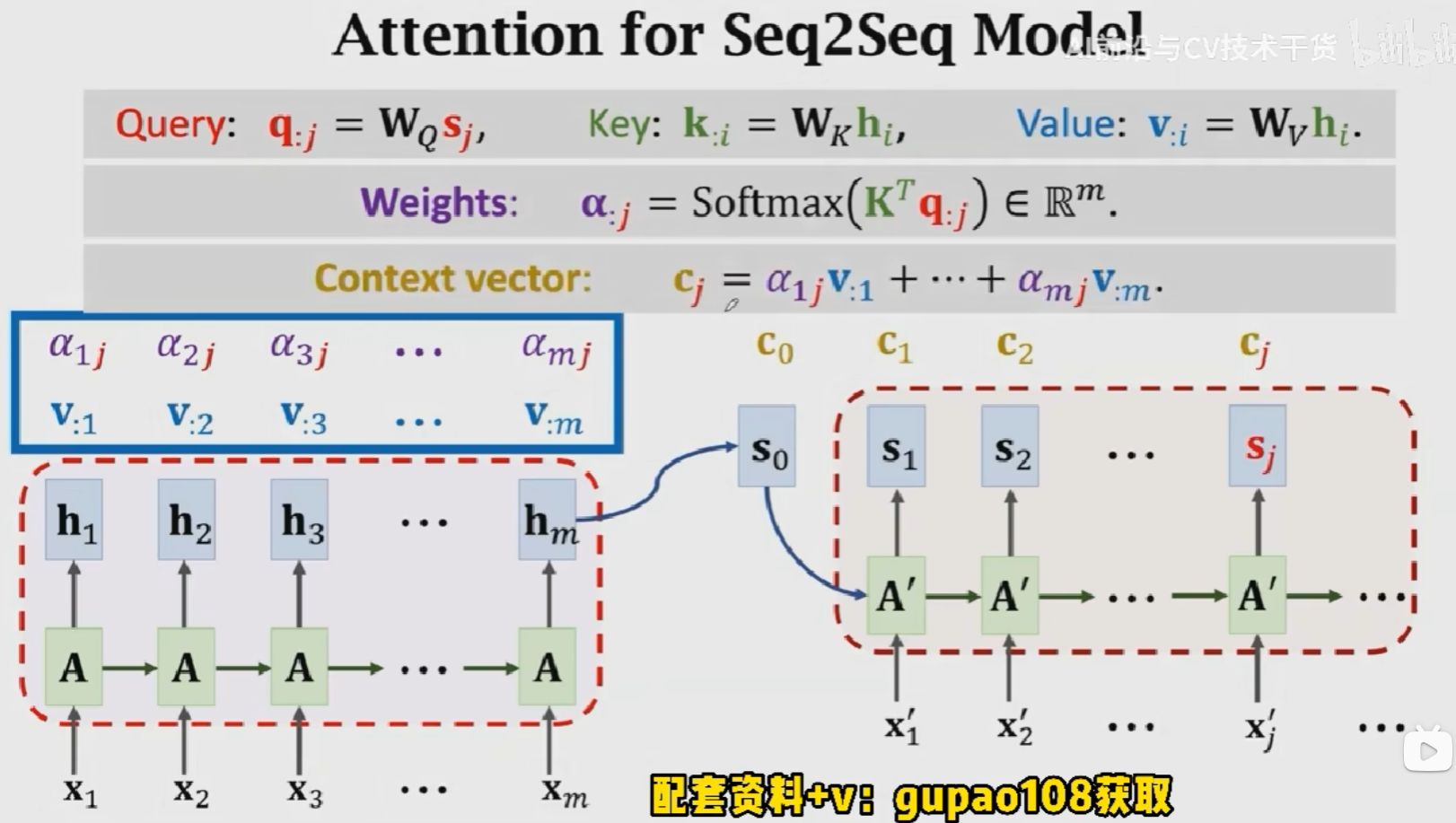

具体的计算流程为:

- 首先,使用Encoder的输入来计算Key和Value向量,得到m个k向量和v向量:

\(k_{:i} = W_Kx_{:i}, v_{:i} = W_vx_{:i}\) - 然后,对Decoder的输入做线性变换,得到t个q向量:

\(q_{:j} = W_Qx_{:j}^{'}\) - 计算权重:

\(\alpha_{:1} = Softmax(K^Tq_{:1})\) - 计算Context vector:

\(c_{:1} = \alpha_{11}v_{:1} + \alpha_{21}v_{:2} + ...\alpha_{m1}v_{:m} = V\alpha_{:1} = VSoftmax(K^Tq_{:1})\) - 用相同的方式计算

\(c_2, c_3, ..., c_t\)

,得到

\(C = [c_1, c_2, ..., c_t]\)

Key:表示待匹配的值,Query表示查找值,这m个

\(\alpha_{:j}\)

就说明是query(

\(q_j\)

)和所有key(

\([k_{:1}, k_{:2}, ..., k_{:m}]\)

)之间的匹配程度。匹配程度越高,权重越大。V是对输入的一个线性变化,使用权重对其进行加权平均得到相关矩阵

\(C\)

。在Attention+RNN的结构中,是对输入状态进行加权平均,这里

\(V\)

相当于对

\([h_1, h_2, ..., h_m]\)

进行线性变换。

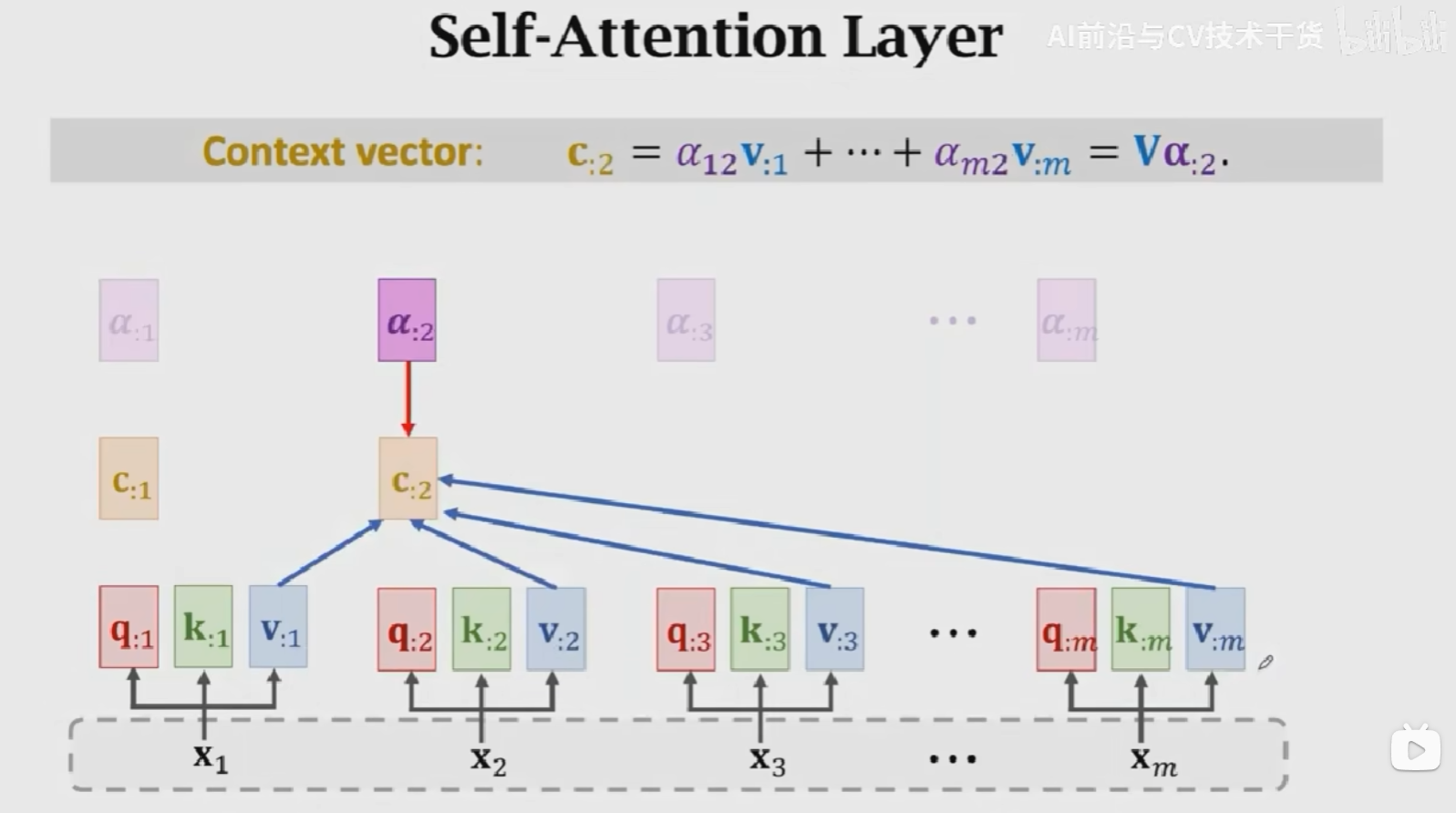

Self-Attention结构

Attention结构接收两个输入得到一个输出,Self-Attention结构接收一个输入得到一个输出,如下图所示。中间的计算过程与Attention完全一致。

Multi-head Self-Attention

上述的Self-Attention结构被称为单头Self-Attention(Single-Head Self-Attention)结构,Multi-Head Self-Attention就是将多个Single-Head Self-Attention的结构进行堆叠,结果Concatenate到一块儿。

假如有

\(l\)

个Single-Head Self-Attention组成一个Multi-Head Self-Attention,Single-Head Self-Attention的输入为

\(X = [x_{:1}, x_{:2}, x_{:3}, ..., x_{:m}]\)

,输出为

\(C = [c_{:1}, c_{:2}, c_{:3}, ..., c_{:m}]\)

维度为

\(dm\)

,

则,Multi-Head Self-Attention的输出维度为

\((ld)*m\)

,参数量为

\(l\)

个

\(W_Q, W_K, W_V\)

即

\(3l\)

个参数矩阵。

Multi-Head Attention操作一致,就是进行多次相同的操作,将结果Concatenate到一块儿。

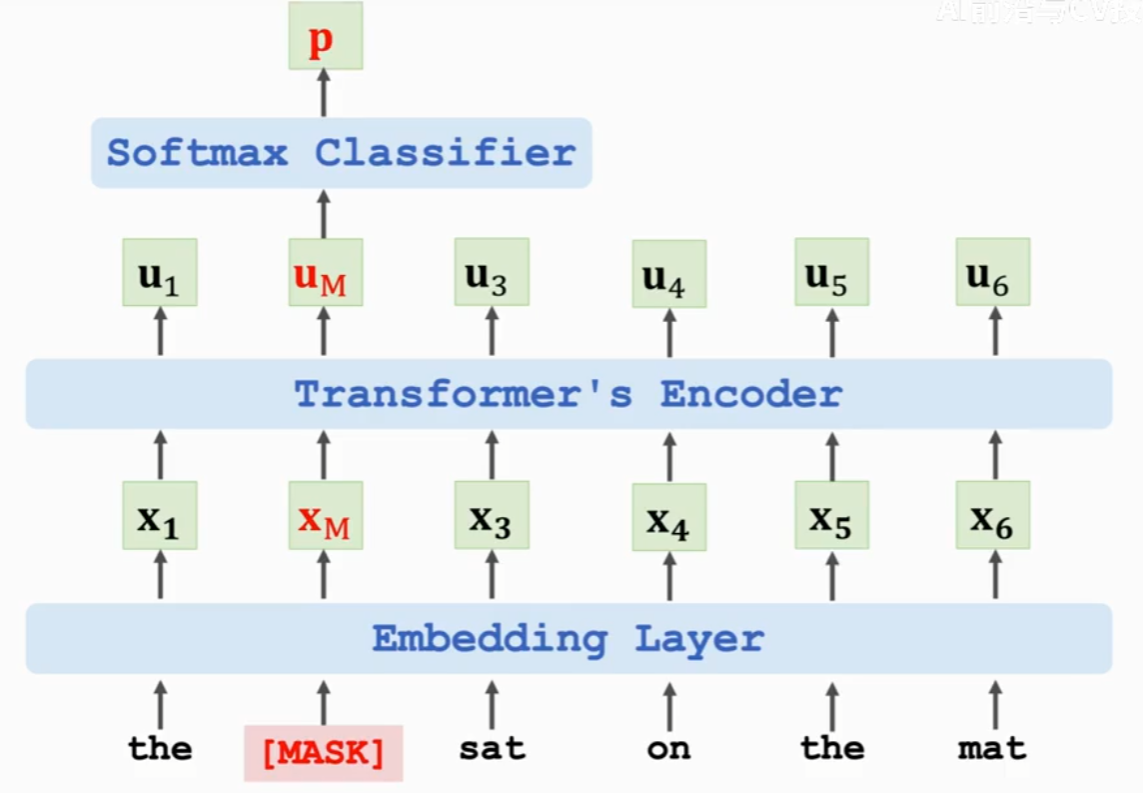

BERT:Bidirectional Encoder Representations from Transformers

BERT的提出是为了预训练Transformer的Encoder网络【BERT[4] is for pre-training Transformer's[3] encoder.】,通过两个任务(1)预测被遮挡的单词(2)预测下一个句子,这两个任务不需要人工标注数据,从而达到使用海量数据训练超级大模型的目的。

BERT有两种任务:

- Task 1: Predict the masked word,预测被遮挡的单词

输入:the [MASK] sat on the mat

groundTruth:cat

损失函数:交叉熵损失

- Task 2: Predict the next sentence,预测下一个句子,判断两句话在文中是否真实相邻

输入:[CLS, first sentence, SEP, second sentence]

输出:true or false

损失函数:交叉熵损失

这样做二分类可以让Encoder学习并强化句子之间的相关性。

好处:

- BERT does not need manually labeled data. (Nice, Manual labeling is expensive.)

- Use large-scale data, e.g., English Wikipedia (2.5 billion words)

- task 1: Randomly mask works(with some tricks)

- task 2: 50% of the next sentences are real. (the other 50% are fake.)

- BERT将上述两个任务结合起来预训练Transformer模型

- 想法简单且非常有效

消耗极大【普通人玩不起,但是BERT训练出来的模型参数是公开的,可以拿来使用】:

- BERT Base

- 110M parameters

- 16 TPUs, 4 days of training

- BERT Large

- 235M parameters

- 64 TPUs, 4days of training

Summary

Transformer:

- Transformer is a Seq2Seq model, it has an encoder and a decoder

- Transformer model is not RNN

- Transfomer is purely based on attention and dense layers(全连接层)

- Transformer outperforms all the state-of-the-art RNN models

Attention的发展:

- Attention was originally developed for Seq2Seq RNN models[1].

- Self-Attention: attention for all the RNN models(not necessarily for Seq2Seq models)[2].

- Attention can be used without RNN[3].

Reference

王树森的Transformer模型

[1] Bahdanau, Cho, & Bengio,

Neural machine translation by jointly learning to align and translate

. In

ICLR

, 2015.

[2] Cheng, Dong, & Lapata. Long Short-Term Memory-Networks for Machine Reading. In

EMNLP

, 2016.

[3] Vaswani et al.

Attention Is All You Need

. In

NIPS

, 2017.

[4] Devlin, Chang, Lee, and Toutanova. BERT: Pre-training of deep bidirectional transformers for language understanding. In

ACL

, 2019.