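Installing and Deploying Suricata with DPDK-Based Packet Capture

I. Background

Suricata supports two capture modes: live capture from a network card and offline reading of PCAP files.

- Offline capture is inherently slow and not real-time

- Live capture in turn covers standard NIC capture, PF_RING, and DPDK

Because the project's optically split traffic is heavy, the capture methods built into the software could not keep up, so we adopted DPDK-based Suricata for live capture from the NIC.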

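II. Server Configuration and Software Versions

Operating system: CentOS 7.6 (1810)

Kernel version: 3.10.0-1160.88.1.el7.x86_64

NIC: Intel X722 10GbE optical (SFP+) Ethernet adapter

DPDK version: dpdk-19.11.14

Suricata version: DPDK_Suricata-4.1.4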

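III. Deploying and Installing DPDK

The DPDK-capture build of Suricata has only been updated as far as 4.1.4, which constrains the DPDK version. After testing, DPDK-19.11.14 is recommended.

DPDK download URL:

http://fast.dpdk.org/rel/dpdk-19.11.14.tar.gz

1. Installing dpdk-19.11.14 requires a kernel newer than 3.2. If the kernel is too old, or the kernel-devel headers do not match the running kernel, upgrade as follows

[root@ids-dpdk ~]# cat /etc/redhat-release
CentOS Linux release 7.6.1810 (Core)
[root@ids-dpdk ~]# uname -r    # check the running kernel version
3.10.0-957.el7.x86_64
[root@ids-dpdk ~]# rpm -qa kernel    # list the installed kernel packages
kernel-3.10.0-957.el7.x86_64
[root@ids-dpdk ~]# ls /usr/src/kernels/    # check for the matching kernel development package
(empty)
# If this directory contains no kernel source, do the following
[root@ids-dpdk ~]# yum install kernel-devel    # install the kernel headers
[root@ids-dpdk ~]# ls /usr/src/kernels/
3.10.0-1160.88.1.el7.x86_64
# The two version numbers differ, so upgrade and reboot
[root@ids-dpdk ~]# yum -y update kernel kernel-devel
[root@ids-dpdk ~]# reboot
# Check again: the version numbers now match and the problem is solved
[root@ids-dpdk ~]# ls /usr/src/kernels/
3.10.0-1160.88.1.el7.x86_64
[root@ids-dpdk ~]# uname -r
3.10.0-1160.88.1.el7.x86_64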
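The manual comparison above boils down to one question: is there a directory under /usr/src/kernels named exactly after the running kernel release? A minimal sketch of that check follows. The function name `headers_match` is made up for illustration, and it assumes kernel-devel names its directory after the kernel release, as the listing above suggests.

```shell
#!/bin/sh
# headers_match RELEASE DIR
# Succeeds when DIR contains a subdirectory named exactly RELEASE,
# i.e. kernel-devel headers exist for that kernel release.
headers_match() {
    release=$1
    src_dir=$2
    [ -d "$src_dir/$release" ]
}

# Typical use on the target host:
if headers_match "$(uname -r)" /usr/src/kernels; then
    echo "kernel-devel matches the running kernel $(uname -r)"
else
    echo "no headers for $(uname -r); consider: yum -y update kernel kernel-devel, then reboot"
fi
```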

2. Install the dependencies

sudo yum install -y gcc make

sudo yum install -y libpcap libpcap-devel

sudo yum install -y numactl numactl-devel

sudo yum install -y pciutils
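To confirm the tools landed on the PATH before building, a small check such as the one below can help. It is only a sketch: `missing_tools` is an illustrative name, and it tests commands, not packages, so the -devel packages (which ship headers only) still need a query such as `rpm -q libpcap-devel numactl-devel`.

```shell
#!/bin/sh
# missing_tools NAME...
# Prints each command name that cannot be found on PATH, one per line.
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || echo "$tool"
    done
}

# gcc and make come from the packages of the same name,
# lspci from pciutils, numactl from numactl.
missing=$(missing_tools gcc make lspci numactl)
if [ -n "$missing" ]; then
    echo "still missing:" $missing
else
    echo "build tools present"
fi
```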

3. Download the DPDK archive from the DPDK site and extract it

Download the DPDK archive into /home and extract it there

cd /home
wget http://fast.dpdk.org/rel/dpdk-19.11.14.tar.gz
tar -zxvf dpdk-19.11.14.tar.gz
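The archive is named dpdk-19.11.14.tar.gz, but the paths used later in this guide (for example RTE_SDK=/home/dpdk-stable-19.11.14) indicate that it unpacks into a differently named directory. Rather than guessing, the top-level directory can be read from the archive itself. A small sketch, with `tarball_top_dir` as an illustrative helper name:

```shell
#!/bin/sh
# tarball_top_dir FILE
# Prints the top-level directory name stored in a gzip-compressed tarball.
tarball_top_dir() {
    tar -tzf "$1" | head -n 1 | cut -d/ -f1
}
```

On the server this could feed the environment variable directly, for instance `export RTE_SDK="/home/$(tarball_top_dir /home/dpdk-19.11.14.tar.gz)"`.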

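4. Building DPDK and Binding the NIC

(1) Set the environment variables on the command line:

[root@ids-dpdk ~]# export RTE_SDK='/home/dpdk-stable-19.11.14'
[root@ids-dpdk ~]# export RTE_TARGET=x86_64-native-linuxapp-gcc
# (use this target on 64-bit machines; on 32-bit machines use i686-native-linuxapp-gcc)

(2) Check that the environment variables are set:

[root@ids-dpdk ~]# env | grep RTE
RTE_SDK=/home/dpdk-stable-19.11.14
RTE_TARGET=x86_64-native-linuxapp-gcc

(3) Bring down the NIC that will be bound; otherwise binding it to DPDK fails:

ifconfig ens1f0 down

(4) Change to the dpdk-stable-19.11.14/usertools directory:

cd /home/dpdk-stable-19.11.14/usertools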
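Since a wrong RTE_SDK path is an easy mistake here, a quick sanity check before launching the setup script may save a failed build. The sketch below only assumes what this guide already shows: that the SDK tree contains usertools/dpdk-setup.sh. The function name `check_rte_env` is illustrative.

```shell
#!/bin/sh
# check_rte_env
# Verifies that RTE_SDK points at an unpacked DPDK tree and that
# RTE_TARGET is set; prints both values on success.
check_rte_env() {
    if [ -z "${RTE_SDK:-}" ] || [ -z "${RTE_TARGET:-}" ]; then
        echo "RTE_SDK or RTE_TARGET is not set" >&2
        return 1
    fi
    if [ ! -f "$RTE_SDK/usertools/dpdk-setup.sh" ]; then
        echo "no usertools/dpdk-setup.sh under $RTE_SDK" >&2
        return 1
    fi
    echo "RTE_SDK=$RTE_SDK RTE_TARGET=$RTE_TARGET"
}
```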

Run ./dpdk-setup.sh

It prints a list of options:

------------------------------------------------------------------------------
 RTE_SDK exported as /home/dpdk-stable-19.11.14
------------------------------------------------------------------------------
----------------------------------------------------------
 Step 1: Select the DPDK environment to build
----------------------------------------------------------
[1] arm64-armada-linuxapp-gcc

[2] arm64-armada-linux-gcc

[3] arm64-armv8a-linuxapp-clang

[4] arm64-armv8a-linuxapp-gcc

[5] arm64-armv8a-linux-clang

[6] arm64-armv8a-linux-gcc

[7] arm64-bluefield-linuxapp-gcc

[8] arm64-bluefield-linux-gcc

[9] arm64-dpaa-linuxapp-gcc

[10] arm64-dpaa-linux-gcc

[11] arm64-emag-linuxapp-gcc

[12] arm64-emag-linux-gcc

[13] arm64-graviton2-linuxapp-gcc

[14] arm64-graviton2-linux-gcc

[15] arm64-n1sdp-linuxapp-gcc

[16] arm64-n1sdp-linux-gcc

[17] arm64-octeontx2-linuxapp-gcc

[18] arm64-octeontx2-linux-gcc

[19] arm64-stingray-linuxapp-gcc

[20] arm64-stingray-linux-gcc

[21] arm64-thunderx2-linuxapp-gcc

[22] arm64-thunderx2-linux-gcc

[23] arm64-thunderx-linuxapp-gcc

[24] arm64-thunderx-linux-gcc

[25] arm64-xgene1-linuxapp-gcc

[26] arm64-xgene1-linux-gcc

[27] arm-armv7a-linuxapp-gcc

[28] arm-armv7a-linux-gcc

[29] graviton2

[30] i686-native-linuxapp-gcc

[31] i686-native-linuxapp-icc

[32] i686-native-linux-gcc

[33] i686-native-linux-icc

[34] ppc_64-power8-linuxapp-gcc

[35] ppc_64-power8-linux-gcc

[36] x86_64-native-bsdapp-clang

[37] x86_64-native-bsdapp-gcc

[38] x86_64-native-freebsd-clang

[39] x86_64-native-freebsd-gcc

[40] x86_64-native-linuxapp-clang

[41] x86_64-native-linuxapp-gcc

[42] x86_64-native-linuxapp-icc

[43] x86_64-native-linux-clang

[44] x86_64-native-linux-gcc

[45] x86_64-native-linux-icc

[46] x86_x32-native-linuxapp-gcc

[47] x86_x32-native-linux-gcc

----------------------------------------------------------
 Step 2: Setup linux environment
----------------------------------------------------------
[48] Insert IGB UIO module

[49] Insert VFIO module

[50] Insert KNI module

[51] Setup hugepage mappings for non-NUMA systems

[52] Setup hugepage mappings for NUMA systems

[53] Display current Ethernet/Baseband/Crypto device settings

[54] Bind Ethernet/Baseband/Crypto device to IGB UIO module

[55] Bind Ethernet/Baseband/Crypto device to VFIO module

[56] Setup VFIO permissions

----------------------------------------------------------
 Step 3: Run test application for linux environment
----------------------------------------------------------
[57] Run test application ($RTE_TARGET/app/test)

[58] Run testpmd application in interactive mode ($RTE_TARGET/app/testpmd)

----------------------------------------------------------
 Step 4: Other tools
----------------------------------------------------------
[59] List hugepage info from /proc/meminfo

----------------------------------------------------------
 Step 5: Uninstall and system cleanup
----------------------------------------------------------
[60] Unbind devices from IGB UIO or VFIO driver

[61] Remove IGB UIO module

[62] Remove VFIO module

[63] Remove KNI module

[64] Remove hugepage mappings

[65] Exit Script

Option:

At the Option: prompt at the bottom, enter 41 to select the gcc build target for x86_64 machines. On a machine with a different architecture, choose the matching target instead.

The build runs for a while...

When it finishes, the following appears:

Build complete [x86_64-native-linuxapp-gcc]
Installation cannot run with T defined and DESTDIR undefined
------------------------------------------------------------------------------
 RTE_TARGET exported as x86_64-native-linuxapp-gcc
------------------------------------------------------------------------------

Press enter to continue ...
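For unattended installs the menu can be skipped. To the best of my understanding, option 41 wraps DPDK 19.11's legacy make build, `make install T=<target>` run from the SDK root, which would also explain the "Installation cannot run with T defined and DESTDIR undefined" line above. The sketch below is written under that assumption. `build_dpdk` and the `DRY_RUN` switch are illustrative names, not part of DPDK.

```shell
#!/bin/sh
# build_dpdk SDK_DIR TARGET
# Runs the legacy make-based DPDK build for one target inside SDK_DIR.
# With DRY_RUN=1 it only prints the command instead of running it.
build_dpdk() {
    sdk_dir=$1
    target=$2
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "cd $sdk_dir && make install T=$target"
    else
        (cd "$sdk_dir" && make install T="$target")
    fi
}
```

A real run would be `build_dpdk "$RTE_SDK" "$RTE_TARGET"`. Setting `DRY_RUN=1` first shows the command without touching the tree.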

Press Enter, then choose the next operation:

Option: 48    (load the igb_uio module)

Unloading any existing DPDK UIO module
Loading DPDK UIO module

Press enter to continue ...

Press Enter, then choose the next operation:

Option: 52    (configure hugepages)

Removing currently reserved hugepages
Unmounting /mnt/huge and removing directory

  Input the number of 2048kB hugepages for each node
  Example: to have 128MB of hugepages available per node in a 2MB huge page system,
  enter '64' to reserve 64 * 2MB pages on each node
Number of pages for node0: 1024    (enter 1024 here)
Reserving hugepages
Creating /mnt/huge and mounting as hugetlbfs

Press enter to continue ...
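The page count entered above translates into memory as pages × page size. A tiny sketch of the arithmetic, with `hugepage_mib` as an illustrative helper name:

```shell
#!/bin/sh
# hugepage_mib PAGES PAGE_KB
# Prints the memory reserved by PAGES hugepages of PAGE_KB kB each, in MiB.
hugepage_mib() {
    echo $(( $1 * $2 / 1024 ))
}

hugepage_mib 64 2048     # the script's own example: prints 128
hugepage_mib 1024 2048   # the value entered above: prints 2048 (2 GiB per node)
```

The reservation can be confirmed afterwards with `grep -i huge /proc/meminfo`, which is the information menu option 59 lists.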

Press Enter, then choose the next operation:

Option: 53    (list all NICs)

Network devices using kernel driver
===================================
0000:17:00.0 'MT27800 Family [ConnectX-5] 1017' if=ens5f0 drv=mlx5_core unused=igb_uio *Active*
0000:17:00.1 'MT27800 Family [ConnectX-5] 1017' if=ens5f1 drv=mlx5_core unused=igb_uio *Active*
0000:33:00.0 'Ethernet Connection X722 for 10GbE SFP+ 0dda' if=ens1f0 drv=i40e unused=igb_uio
0000:33:00.1 'Ethernet Connection X722 for 10GbE SFP+ 0dda' if=ens1f1 drv=i40e unused=igb_uio

No 'Baseband' devices detected
==============================

No 'Crypto' devices detected
============================

No 'Eventdev' devices detected
==============================

No 'Mempool' devices detected
=============================

No 'Compress' devices detected
==============================

No 'Misc (rawdev)' devices detected
===================================

Press enter to continue ...

Press Enter, then choose the next operation:

Option: 54    (bind the NIC)

Network devices using kernel driver
===================================
0000:17:00.0 'MT27800 Family [ConnectX-5] 1017' if=ens5f0 drv=mlx5_core unused=igb_uio *Active*
0000:17:00.1 'MT27800 Family [ConnectX-5] 1017' if=ens5f1 drv=mlx5_core unused=igb_uio *Active*
0000:33:00.0 'Ethernet Connection X722 for 10GbE SFP+ 0dda' if=ens1f0 drv=i40e unused=igb_uio
0000:33:00.1 'Ethernet Connection X722 for 10GbE SFP+ 0dda' if=ens1f1 drv=i40e unused=igb_uio

No 'Baseband' devices detected
==============================

No 'Crypto' devices detected
============================

No 'Eventdev' devices detected
==============================

No 'Mempool' devices detected
=============================

No 'Compress' devices detected
==============================

No 'Misc (rawdev)' devices detected
===================================

Enter PCI address of device to bind to IGB UIO driver: 0000:33:00.0    (enter the NIC's PCI address here, taken from the list above)

On success it prints:

ok

Press enter to continue ...
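The same binding can be done without the menu by calling usertools/dpdk-devbind.py with its `--bind` option. The sketch below adds a format check on the PCI address first, so that a typo does not reach the tool. `is_pci_addr` and `bind_nic` are illustrative names, and the default driver igb_uio simply mirrors the choice made in this guide.

```shell
#!/bin/sh
# is_pci_addr STRING
# Succeeds when STRING looks like a full PCI address: domain:bus:device.function
is_pci_addr() {
    printf '%s\n' "$1" |
        grep -Eq '^[0-9a-fA-F]{4}:[0-9a-fA-F]{2}:[0-9a-fA-F]{2}\.[0-7]$'
}

# bind_nic PCI_ADDR [DRIVER]
# Binds the device to DRIVER (igb_uio by default) via dpdk-devbind.py.
# Requires RTE_SDK to be set and the driver module to be loaded already.
bind_nic() {
    addr=$1
    driver=${2:-igb_uio}
    if ! is_pci_addr "$addr"; then
        echo "bad PCI address: $addr" >&2
        return 1
    fi
    "$RTE_SDK/usertools/dpdk-devbind.py" --bind="$driver" "$addr"
}
```

For the NIC used here the call would be `bind_nic 0000:33:00.0`.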

Press Enter, then choose the next operation:

Option: 53    (check the binding)

Network devices using DPDK-compatible driver
============================================
0000:33:00.0 'Ethernet Connection X722 for 10GbE SFP+ 0dda' drv=igb_uio unused=i40e

Network devices using kernel driver
===================================
0000:17:00.0 'MT27800 Family [ConnectX-5] 1017' if=ens5f0 drv=mlx5_core unused=igb_uio *Active*
0000:17:00.1 'MT27800 Family [ConnectX-5] 1017' if=ens5f1 drv=mlx5_core unused=igb_uio *Active*
0000:33:00.1 'Ethernet Connection X722 for 10GbE SFP+ 0dda' if=enp51s0f1 drv=i40e unused=igb_uio

No 'Baseband' devices detected
==============================

No 'Crypto' devices detected
============================

No 'Eventdev' devices detected
==============================

No 'Mempool' devices detected
=============================

No 'Compress' devices detected
==============================

No 'Misc (rawdev)' devices detected
===================================

Press enter to continue ...
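In a script, the visual check above can be replaced by extracting the drv= field for one device from the status listing. The sketch below assumes the line format shown above (PCI address first, then key=value fields). `bound_driver` is an illustrative name.

```shell
#!/bin/sh
# bound_driver PCI_ADDR
# Reads a device status listing on stdin and prints the value of the
# drv= field on the line whose first field is PCI_ADDR.
bound_driver() {
    awk -v addr="$1" '$1 == addr {
        for (i = 2; i <= NF; i++)
            if ($i ~ /^drv=/) print substr($i, 5)
    }'
}
```

Typical use would be `"$RTE_SDK/usertools/dpdk-devbind.py" --status | bound_driver 0000:33:00.0`, which should print igb_uio once the binding has succeeded.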

Press Enter, then choose the next operation:

Option: 57    (run a simple test)

  Enter hex bitmask of cores to execute test app on
  Example: to execute app on cores 0 to 7, enter 0xff
bitmask: 0xff    (enter 0xff here)
Launching app
EAL: Detected 40 lcore(s)
EAL: Detected 2 NUMA nodes
EAL: Multi-process socket /var/run/dpdk/rte/mp_socket
EAL: Selected IOVA mode 'PA'
EAL: No available hugepages reported in hugepages-1048576kB
EAL: Probing VFIO support...
EAL: PCI device 0000:00:04.0 on NUMA socket 0
EAL:   probe driver: 8086:2021 rawdev_ioat
EAL: PCI device 0000:00:04.1 on NUMA socket 0
EAL:   probe driver: 8086:2021 rawdev_ioat
EAL: PCI device 0000:00:04.2 on NUMA socket 0
EAL:   probe driver: 8086:2021 rawdev_ioat
EAL: PCI device 0000:00:04.3 on NUMA socket 0
EAL:   probe driver: 8086:2021 rawdev_ioat
EAL: PCI device 0000:00:04.4 on NUMA socket 0
EAL:   probe driver: 8086:2021 rawdev_ioat
EAL: PCI device 0000:00:04.5 on NUMA socket 0
EAL:   probe driver: 8086:2021 rawdev_ioat
EAL: PCI device 0000:00:04.6 on NUMA socket 0
EAL:   probe driver: 8086:2021 rawdev_ioat
EAL: PCI device 0000:00:04.7 on NUMA socket 0
EAL:   probe driver: 8086:2021 rawdev_ioat
EAL: PCI device 0000:3d:00.0 on NUMA socket 0
EAL:   probe driver: 8086:37d3 net_i40e
i40e_GLQF_reg_init(): i40e device 0000:3d:00.0 changed global register [0x002689a0]. original: 0x0000002a, new: 0x00000029
i40e_GLQF_reg_init(): i40e device 0000:3d:00.0 changed global register [0x00268ca4]. original: 0x00002826, new: 0x00009420
EAL: PCI device 0000:3d:00.1 on NUMA socket 0
EAL:   probe driver: 8086:37d3 net_i40e
EAL: PCI device 0000:80:04.0 on NUMA socket 1
EAL:   probe driver: 8086:2021 rawdev_ioat
EAL: PCI device 0000:80:04.1 on NUMA socket 1
EAL:   probe driver: 8086:2021 rawdev_ioat
EAL: PCI device 0000:80:04.2 on NUMA socket 1
EAL:   probe driver: 8086:2021 rawdev_ioat
EAL: PCI device 0000:80:04.3 on NUMA socket 1
EAL:   probe driver: 8086:2021 rawdev_ioat
EAL: PCI device 0000:80:04.4 on NUMA socket 1
EAL:   probe driver: 8086:2021 rawdev_ioat
EAL: PCI device 0000:80:04.5 on NUMA socket 1
EAL:   probe driver: 8086:2021 rawdev_ioat
EAL: PCI device 0000:80:04.6 on NUMA socket 1
EAL:   probe driver: 8086:2021 rawdev_ioat
EAL: PCI device 0000:80:04.7 on NUMA socket 1
EAL:   probe driver: 8086:2021 rawdev_ioat
APP: HPET is not enabled, using TSC as default timer

Output like the above indicates that DPDK was installed successfully.

RTE>>quit    (enter quit here)

Press enter to continue ...

Press Enter, then choose the next operation:

Option: 58    (run a packet capture test)

  Enter hex bitmask of cores to execute testpmd app on
  Example: to execute app on cores 0 to 7, enter 0xff
bitmask: 7    (enter 7)
Launching app
EAL: Detected 40 lcore(s)
EAL: Detected 2 NUMA nodes
EAL: Multi-process socket /var/run/dpdk/rte/mp_socket
EAL: Selected IOVA mode 'PA'
EAL: No available hugepages reported in hugepages-1048576kB
EAL: Probing VFIO support...
EAL: PCI device 0000:00:04.0 on NUMA socket 0
EAL:   probe driver: 8086:2021 rawdev_ioat
EAL: PCI device 0000:00:04.1 on NUMA socket 0
EAL:   probe driver: 8086:2021 rawdev_ioat
EAL: PCI device 0000:00:04.2 on NUMA socket 0
EAL:   probe driver: 8086:2021 rawdev_ioat
EAL: PCI device 0000:00:04.3 on NUMA socket 0
EAL:   probe driver: 8086:2021 rawdev_ioat
EAL: PCI device 0000:00:04.4 on NUMA socket 0
EAL:   probe driver: 8086:2021 rawdev_ioat
EAL: PCI device 0000:00:04.5 on NUMA socket 0
EAL:   probe driver: 8086:2021 rawdev_ioat
EAL: PCI device 0000:00:04.6 on NUMA socket 0
EAL:   probe driver: 8086:2021 rawdev_ioat
EAL: PCI device 0000:00:04.7 on NUMA socket 0
EAL:   probe driver: 8086:2021 rawdev_ioat
EAL: PCI device 0000:3d:00.0 on NUMA socket 0
EAL:   probe driver: 8086:37d3 net_i40e
EAL: PCI device 0000:3d:00.1 on NUMA socket 0
EAL:   probe driver: 8086:37d3 net_i40e
EAL: PCI device 0000:80:04.0 on NUMA socket 1
EAL:   probe driver: 8086:2021 rawdev_ioat
EAL: PCI device 0000:80:04.1 on NUMA socket 1
EAL:   probe driver: 8086:2021 rawdev_ioat
EAL: PCI device 0000:80:04.2 on NUMA socket 1
EAL:   probe driver: 8086:2021 rawdev_ioat
EAL: PCI device 0000:80:04.3 on NUMA socket 1
EAL:   probe driver: 8086:2021 rawdev_ioat
EAL: PCI device 0000:80:04.4 on NUMA socket 1
EAL:   probe driver: 8086:2021 rawdev_ioat
EAL: PCI device 0000:80:04.5 on NUMA socket 1
EAL:   probe driver: 8086:2021 rawdev_ioat
EAL: PCI device 0000:80:04.6 on NUMA socket 1
EAL:   probe driver: 8086:2021 rawdev_ioat
EAL: PCI device 0000:80:04.7 on NUMA socket 1
EAL:   probe driver: 8086:2021 rawdev_ioat
Interactive-mode selected
testpmd: create a new mbuf pool <mbuf_pool_socket_0>: n=163456, size=2176, socket=0
testpmd: preferred mempool ops selected: ring_mp_mc

Warning! port-topology=paired and odd forward ports number, the last port will pair with itself.

Configuring Port 0 (socket 0)
Port 0: F0:10:90:7E:FF:03
Checking link statuses...
Done
testpmd> start    (enter start to begin capturing)
io packet forwarding - ports=1 - cores=1 - streams=1 - NUMA support enabled, MP allocation mode: native
Logical Core 1 (socket 0) forwards packets on 1 streams:
  RX P=0/Q=0 (socket 0) -> TX P=0/Q=0 (socket 0) peer=02:00:00:00:00:00

  io packet forwarding packets/burst=32
  nb forwarding cores=1 - nb forwarding ports=1
  port 0: RX queue number: 1 Tx queue number: 1
    Rx offloads=0x0 Tx offloads=0x10000
    RX queue: 0
      RX desc=256 - RX free threshold=32
      RX threshold registers: pthresh=0 hthresh=0 wthresh=0
      RX Offloads=0x0
    TX queue: 0
      TX desc=256 - TX free threshold=32
      TX threshold registers: pthresh=32 hthresh=0 wthresh=0
      TX offloads=0x10000 - TX RS bit threshold=32
testpmd> stop    (enter stop to end capturing)
Telling cores to stop...
Waiting for lcores to finish...

  ---------------------- Forward statistics for port 0  ----------------------
  RX-packets: 2685           RX-dropped: 0             RX-total: 2685
  TX-packets: 2686           TX-dropped: 0             TX-total: 2686
  ----------------------------------------------------------------------------

  +++++++++++++++ Accumulated forward statistics for all ports+++++++++++++++
  RX-packets: 2685           RX-dropped: 0             RX-total: 2685
  TX-packets: 2686           TX-dropped: 0             TX-total: 2686
  ++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

Done.
testpmd> quit    (enter quit here)

Stopping port 0...
Stopping ports...
Done

Shutting down port 0...
Closing ports...
Done

Bye...

Press enter to continue ...
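Both test applications above ask for a hex bitmask of cores: bit N set means core N is used, so 0xff selects cores 0 to 7 and 7 (binary 111) selects cores 0 to 2. A short sketch that builds the mask for a contiguous core range, with `core_mask` as an illustrative helper name:

```shell
#!/bin/sh
# core_mask FIRST LAST
# Prints the hex bitmask with one bit set for every core from FIRST to LAST.
core_mask() {
    first=$1
    last=$2
    mask=0
    core=$first
    while [ "$core" -le "$last" ]; do
        mask=$(( mask | (1 << core) ))
        core=$(( core + 1 ))
    done
    printf '0x%x\n' "$mask"
}

core_mask 0 7   # cores 0-7: prints 0xff
core_mask 0 2   # cores 0-2: prints 0x7
```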

Press Enter, then choose the next operation:

Option: 65    (exit the setup script)

At this point DPDK is installed successfully.

5. Building and Installing DPDK_Suricata

(1) Install the dependencies

[root@ids-dpdk ~]# yum -y install gcc libpcap-devel pcre-devel libyaml-devel file-devel \
zlib-devel jansson-devel nss-devel libcap-ng-devel libnet-devel tar make openssl openssl-devel \
libnetfilter_queue-devel lua-devel PyYAML libmaxminddb-devel rustc cargo librdkafka-devel \
lz4-devel libxml2 autoconf

(2) Download the DPDK_Suricata source

## The project author has already updated DPDK_Suricata to 4.1.4; only the repository name still says 4.1.1, so simply clone the repository

[root@ids-dpdk ~]# cd /home
[root@ids-dpdk home]# git clone https://github.com/vipinpv85/DPDK_SURICATA-4_1_1

(3) Build and install DPDK_Suricata

Change into the DPDK_Suricata directory:

[root@ids-dpdk ~]# cd /home/DPDK_SURICATA-4_1_1/suricata-4.1.4

Generate the configure script with DPDK support:

[root@ids-dpdk suricata-4.1.4]# autoconf

Configure with DPDK enabled:

[root@ids-dpdk suricata-4.1.4]# ./configure --prefix=/usr --sysconfdir=/etc --localstatedir=/var --enable-dpdk

Build DPDK_Suricata:

[root@ids-dpdk suricata-4.1.4]# make

Install DPDK_Suricata:

[root@ids-dpdk suricata-4.1.4]# make install

Update the rule files:

[root@ids-dpdk suricata-4.1.4]# make install-full

Install the related configuration files:

[root@ids-dpdk suricata-4.1.4]# make install-conf

6. Test Run of Packet Capture

Edit the configuration file suricata.yaml

[root@ids-dpdk ~]# vim /etc/suricata/suricata.yaml

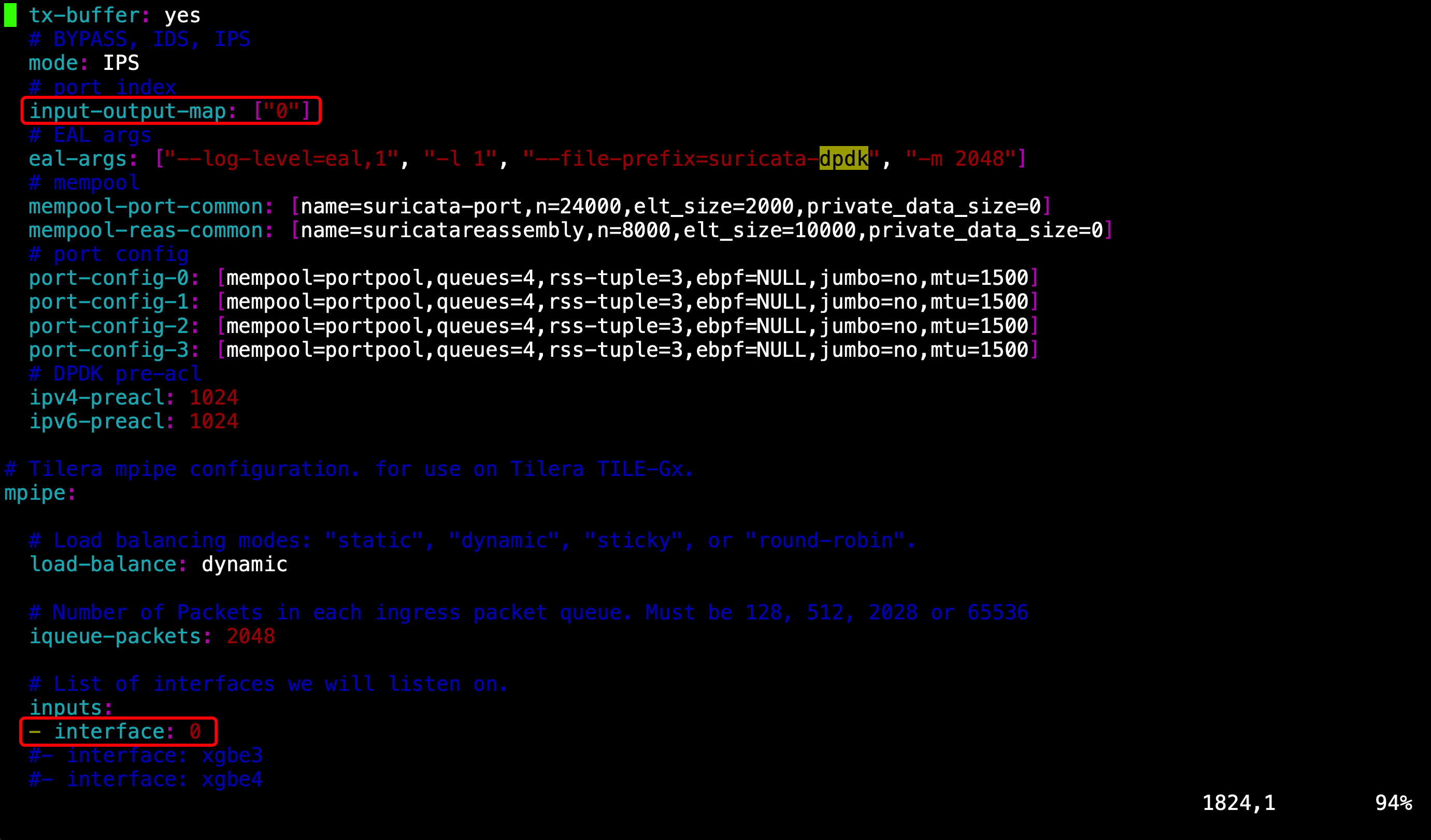

Search for the keyword DPDK and change the two parameters input-output-map and interface

Change input-output-map to: input-output-map: ["0"]

Change interface to: - interface: 0

When finished, type :wq to save and exit

Test run:

[root@ids-dpdk ~]# suricata

If the following error appears, a library file could not be found:

/usr/bin/suricata: error while loading shared libraries: libhtp.so.2: cannot open shared object file: No such file or directory

Create a symbolic link to libhtp.so.2 by hand:

[root@ids-dpdk ~]# ln -s /usr/lib/libhtp.so.2 /lib64/libhtp.so.2

Then start the DPDK capture test:

[root@ids-dpdk ~]# suricata --dpdk
22/3/2023 -- 15:36:51 - <Notice> - --26. (protocol-ff)
22/3/2023 -- 15:36:51 - <Notice> - --27. (protocol-ff)
22/3/2023 -- 15:36:51 - <Notice> - --28. (protocol-ff)
22/3/2023 -- 15:36:51 - <Notice> - --29. (protocol-ff)
22/3/2023 -- 15:36:51 - <Notice> - --30. (protocol-ff)
22/3/2023 -- 15:36:51 - <Notice> - --31. (protocol-ff)
22/3/2023 -- 15:36:51 - <Notice> - addr_dst_match4_cnt 1 addr_src_match4_cnt 1 addr_dst_match6_cnt i1 addr_src_match6_cnt 1
22/3/2023 -- 15:36:51 - <Notice> - IPV4
22/3/2023 -- 15:36:51 - <Notice> - 0:ffffffff
22/3/2023 -- 15:36:51 - <Notice> - 0:ffffffff
22/3/2023 -- 15:36:51 - <Notice> - -----------------------
22/3/2023 -- 15:36:51 - <Notice> - IPV6
22/3/2023 -- 15:36:51 - <Notice> - 0-0-0-0:ffffffff-ffffffff-ffffffff-ffffffff
22/3/2023 -- 15:36:51 - <Notice> - 0-0-0-0:ffffffff-ffffffff-ffffffff-ffffffff
22/3/2023 -- 15:36:51 - <Notice> - -----------------------
22/3/2023 -- 15:36:51 - <Notice> - Source Port
22/3/2023 -- 15:36:51 - <Notice> - port:port2 (0:ffff)
22/3/2023 -- 15:36:51 - <Notice> - Destiantion Port
22/3/2023 -- 15:36:51 - <Notice> - port:port2 (0:ffff)
22/3/2023 -- 15:36:51 - <Notice> - prio 3
22/3/2023 -- 15:36:51 - <Notice> - Port 0 RX-q (4) hence trying RSS
22/3/2023 -- 15:36:51 - <Notice> - rss_hf 3ef8, rss_key_len 0
22/3/2023 -- 15:36:52 - <Notice> - all 4 packet processing threads, 4 management threads initialized, engine started.

At this point DPDK_Suricata is capturing packets through DPDK.
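When a loader error such as the libhtp.so.2 one above shows up, it can help to list every unresolved library at once instead of fixing them one launch at a time. The sketch below relies on the standard `ldd` tool, which marks unresolved entries with "not found". `missing_libs` is an illustrative name.

```shell
#!/bin/sh
# missing_libs BINARY
# Prints the shared libraries that the dynamic loader cannot resolve
# for BINARY, one per line; prints nothing when all are found.
missing_libs() {
    ldd "$1" 2>/dev/null | awk '/not found/ { print $1 }'
}
```

On the server, `missing_libs /usr/bin/suricata` would be expected to print libhtp.so.2 before the symbolic link is created and nothing afterwards.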

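7. Supplementary DPDK Scripts

A server reboot releases the NIC from its DPDK binding by default. To avoid repeating the same manual binding steps each time, a shell script automates binding the NIC to DPDK.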

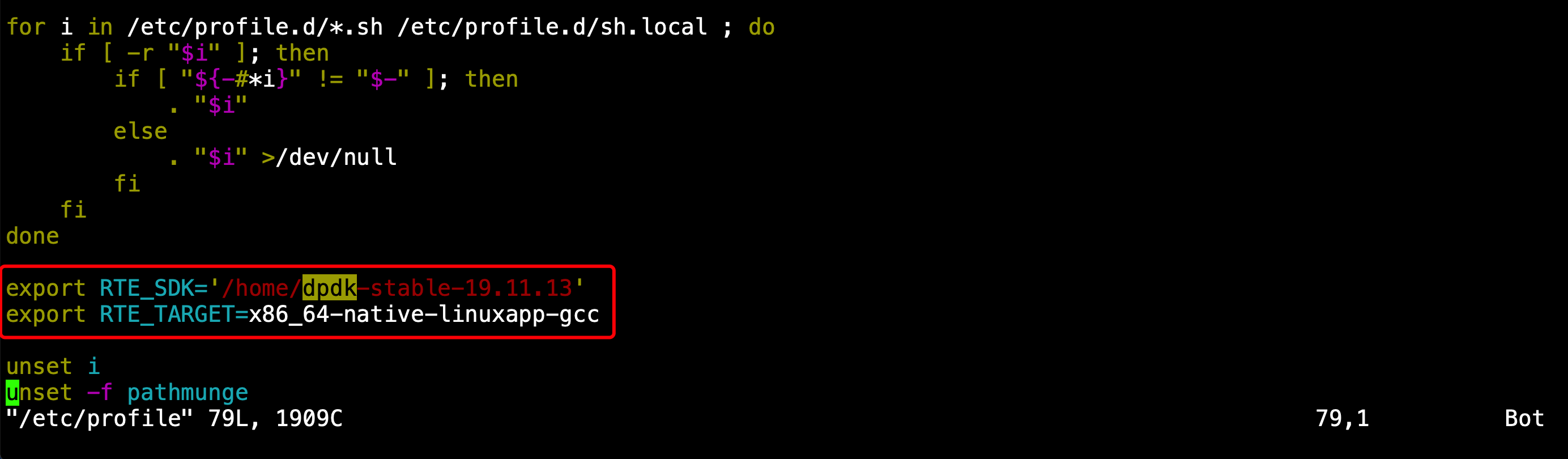

Edit /etc/profile to set the environment variables

Add the environment variables between done and unset i, type :wq to save and exit, then run source to apply them

[root@ids-dpdk ~]# vim /etc/profile

Content to add:

export RTE_SDK='/home/dpdk-stable-19.11.14'

export RTE_TARGET=x86_64-native-linuxapp-gcc

[root@ids-dpdk ~]# source /etc/profile

Create the DPDK binding script dpdk-bind.sh and make it executable

[root@ids-dpdk usertools]# vim dpdk-bind.sh

[root@ids-dpdk usertools]# chmod -R 775 dpdk-bind.sh

The contents of dpdk-bind.sh are shown below; change the NIC name and the paths to suit your environment

#!/bin/sh

# NIC name
uio=ens1f0

# Driver to bind to: igb_uio or vfio-pci
pci_type=igb_uio

# Load the driver modules
modprobe uio
insmod /home/dpdk-stable-19.11.14/x86_64-native-linuxapp-gcc/kmod/igb_uio.ko

# Bring the NIC down
ifconfig $uio down

# Bind the NIC to igb_uio
python /home/dpdk-stable-19.11.14/usertools/dpdk-devbind.py --bind=$pci_type $uio
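The script above identifies the NIC by its interface name. The earlier status listing shows no if= field for a device bound to igb_uio, so the name is only usable while the kernel driver still owns the card. If the PCI address is preferred as a stable handle, it can be looked up from sysfs while the interface still exists. A sketch, with `iface_pci_addr` as an illustrative name and the optional second argument present only so the function can be tried against a fake tree:

```shell
#!/bin/sh
# iface_pci_addr IFACE [SYSFS_ROOT]
# Prints the PCI address behind a kernel network interface by resolving
# the class/net/IFACE/device symlink. SYSFS_ROOT defaults to /sys.
iface_pci_addr() {
    sysfs=${2:-/sys}
    link="$sysfs/class/net/$1/device"
    [ -e "$link" ] || return 1
    basename "$(readlink -f "$link")"
}
```

For the NIC in this guide, `iface_pci_addr ens1f0` would be expected to print 0000:33:00.0, which can then be passed to dpdk-devbind.py in place of the interface name.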

Configure hugepages and add the DPDK binding script to the boot sequence by editing /etc/rc.local; change the paths and other details to suit your environment

#!/bin/bash
# THIS FILE IS ADDED FOR COMPATIBILITY PURPOSES
#
# It is highly advisable to create own systemd services or udev rules
# to run scripts during boot instead of using this file.
#
# In contrast to previous versions due to parallel execution during boot
# this script will NOT be run after all other services.
#
# Please note that you must run 'chmod +x /etc/rc.d/rc.local' to ensure
# that this script will be executed during boot.

touch /var/lock/subsys/local

# Configure hugepages
echo 1024 > /sys/kernel/mm/hugepages/hugepages-2048kB/nr_hugepages
mkdir -p /mnt/huge > /dev/null 2>&1
mount -t hugetlbfs nodev /mnt/huge

# Bind the NIC to DPDK
sh /home/dpdk-stable-19.11.14/usertools/dpdk-bind.sh > /dev/null 2>&1

When finished, type :wq to save and exit. As the file's own header notes, run chmod +x /etc/rc.d/rc.local so that the script is executed at boot.
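The rc.local header itself recommends a systemd service over this file. As one possible alternative for the binding step, the sketch below writes a oneshot unit that runs the binding script at boot. The unit name dpdk-bind.service and the helper `write_bind_unit` are assumptions made for illustration; the hugepage setup would still need to happen elsewhere.

```shell
#!/bin/sh
# write_bind_unit UNIT_PATH SCRIPT_PATH
# Writes a oneshot systemd unit that runs SCRIPT_PATH once at boot.
write_bind_unit() {
    cat > "$1" <<EOF
[Unit]
Description=Bind NIC to DPDK

[Service]
Type=oneshot
RemainAfterExit=yes
ExecStart=/bin/sh $2

[Install]
WantedBy=multi-user.target
EOF
}
```

A possible invocation is `write_bind_unit /etc/systemd/system/dpdk-bind.service /home/dpdk-stable-19.11.14/usertools/dpdk-bind.sh`, followed by `systemctl daemon-reload` and `systemctl enable dpdk-bind.service`.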