记一次python写爬虫爬取学校官网的文章

有一位老师想要把官网上有关数字化的文章全部下载下来,于是找到我,使用python来达到目的

首先先查看了文章的网址

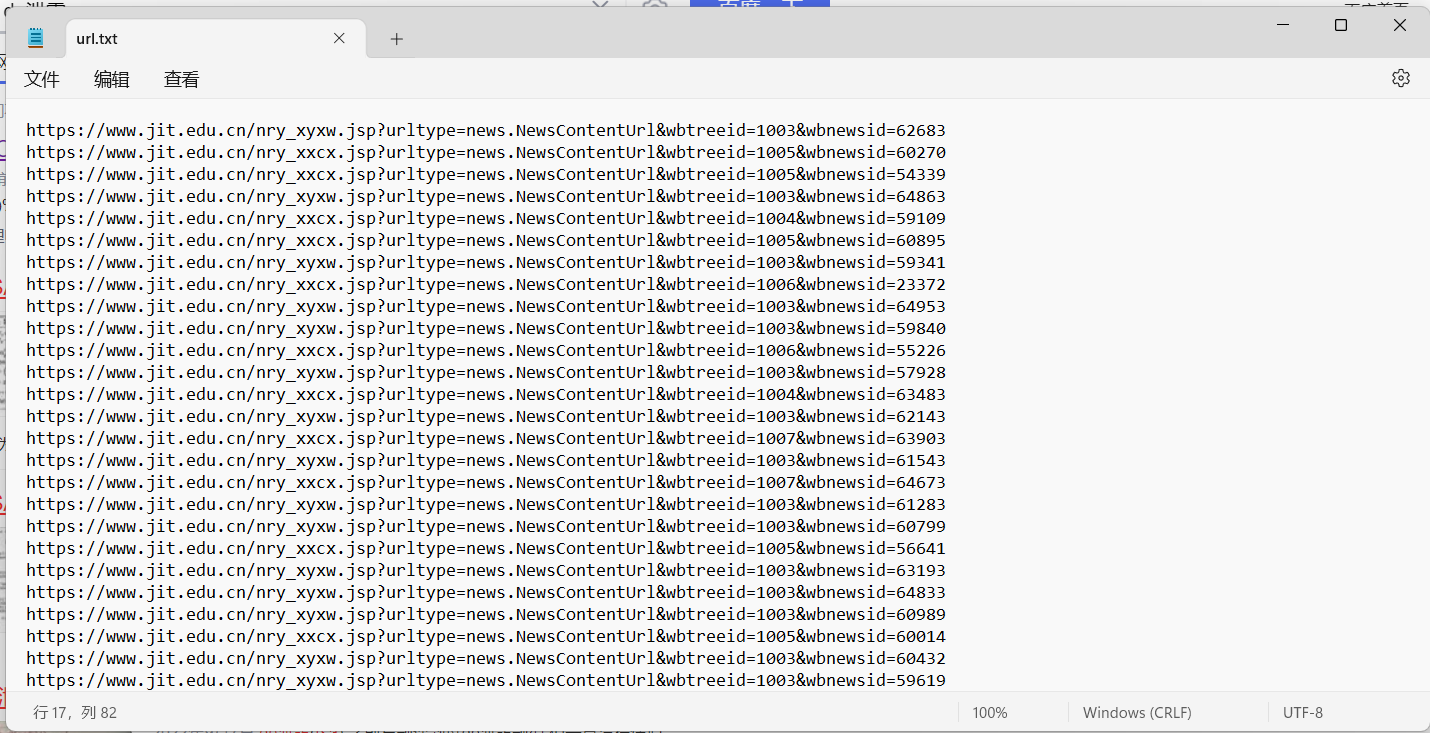

获取了网页的源代码发现一个问题,源代码里面没有url,这里的话就需要用到抓包了,因为很明显这里显示的内容是进行了一个请求,所以只能通过抓包先拿到请求的url从而获得每一篇文章对应的url,获取到了之后使用python全部下载到了一个文本文件中

这时候我们就拿到了所有文章的链接,接下来写函数实现获取网页源代码,这里用到了python爬虫常用的BeautifulSoup处理源代码很方便以下是实现的代码:

defhtml(url):

head={"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/92.0.4515.131 Safari/537.36 Edg/92.0.902.67","cookie": "Hm_lvt_af43f8d0f4624bbf72abe037042ebff4=1640837022; __gads=ID=a34c31647ad9e765-22ab388e9bd6009c:T=1637739267:S=ALNI_MYCjel4B8u2HShqgmXs8VNhk1NFuw; __utmc=66375729; __utmz=66375729.1663684462.1.1.utmcsr=(direct)|utmccn=(direct)|utmcmd=(none); __gpi=UID=000004c822cf58b2:T=1649774466:RT=1663684463:S=ALNI_Ma3kL14WadtyLP-_lSQquhy_w85ag; __utma=66375729.1148601284.1603116839.1663684462.1663687392.2; .Cnblogs.AspNetCore.Cookies=CfDJ8NfDHj8mnYFAmPyhfXwJojexiKc4NcOPoFywr0vQbiMK4dqoay5vz8olTO_g9ZwQB7LGND5BBPtP2AT24aKeO4CP01olhQxu4EsHxzPVjGiKFlwdzRRDSWcwUr12xGxR89b_HFIQnmL9u9FgqjF6CI8canpEYxvgxZlNjSlBxDcWOzuMTVqozYVTanS-vAUSOZvdUz8T2XVahf8CQIZp6i3JzSkaaGUrXzEAEYMnyPOm5UnDjXcxAW00qwVmfLNW9XO_ITD7GVLrOg-gt7NFWHE29L9ejbNjMLECBdvHspokli6M78tCC5gmdvetlWl-ifnG5PpL7vNNFGYVofGfAZvn27iOXHTdHlEizWiD83icbe9URBCBk4pMi4OSRhDl4Sf9XASm7XKY7PnrAZTMz8pvm0ngsMVaqPfCyPZ5Djz1QvKgQX3OVFpIvUGpiH3orBfr9f6YmA7PB-T62tb45AZ3DB8ADTM4QcahO6lnjjSEyBVSUwtR21Vxl0RsguWdHJJfNq5C5YMp4QS0BfjvpL-OvdszY7Vy6o2B5VCo3Jic; .CNBlogsCookie=71474A3A63B98D6DA483CA38404D82454FB23891EE5F8CC0F5490642339788071575E9E95E785BF883C1E6A639CD61AC99F33702EF6E82F51D55D16AD9EBD615D26B40C1224701F927D6CD4F67B7375C7CC713BD; _ga_3Q0DVSGN10=GS1.1.1663687371.1.1.1663687557.1.0.0; Hm_lvt_866c9be12d4a814454792b1fd0fed295=1662692547,1663250719,1663417166,1663687558; Hm_lpvt_866c9be12d4a814454792b1fd0fed295=1663687558; _ga=GA1.2.1148601284.1603116839; _gid=GA1.2.444836177.1663687558; __utmt=1; __utmb=66375729.11.10.1663687392"}

response= requests.get(url, headers=head) #获取网页信息 response.encoding = 'utf-8' #html = response.text #所有内容 content =response.content.decode()#匹配文章标题 pattern2 = r'"pageTitle" content="(.*?)">'match2=re.search(pattern2, content)#标题 bt = match2.group(1)

soup= BeautifulSoup(content,'html.parser')#内容 nr=soup.get_text()

write(bt,nr)

伪造一个header的头,因为学校官网设置的有简易的反爬机制,所以需要伪装成正常的浏览器访问,写一个简单的正则匹配文章的标题作为txt的文件名

现在拿到了标题和文章内容就可以写入文本了

创建文本文件并写入内容代码:

defwrite(bt,nr):

with open(r'C:\Users\13777\Desktop\猜猜看\1\\'+bt+'.txt','w',encoding='utf-8') as f:

f.write(nr)

with open(r'C:\Users\13777\Desktop\猜猜看\1\\'+bt+'.txt','r',encoding='utf-8') as f:

lines=f.readlines()#切片方法,从第4行开始,到倒数第2行结束 new_lines = lines[67:-1]

with open(r'C:\Users\13777\Desktop\猜猜看\1\\'+bt+'.txt','w',encoding='utf-8') as f:

f.writelines(new_lines)print('yes')

with open(r'C:\Users\13777\Desktop\猜猜看\url.txt') as t:for line int.readlines():

url=line.strip()

html(url)

这里遇到一个问题就是经过BeautifulSoup处理后的内容前面有一段是没有任何作用的文本,于是写入文本再进行切片把前面没有用处的文本去掉,剩下的都是文章的内容

最终实现的效果: